More on domain values from me this week.

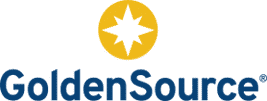

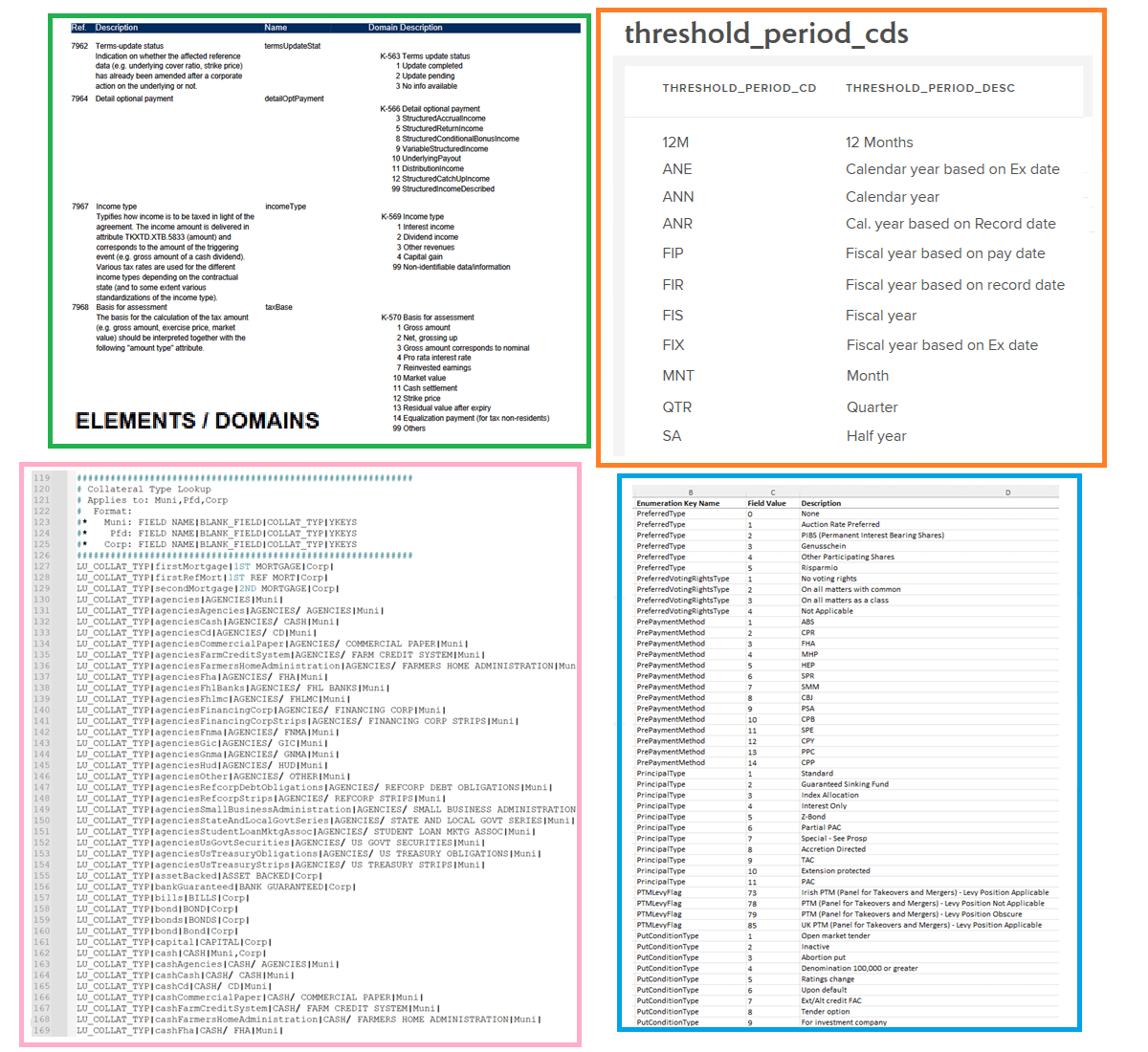

Just to recap, domain values – a.k.a. ‘enumerated values’ – are the allowable set of descriptors given to a specific attribute. They describe certain characteristics of financial instruments, legal entities, corporate actions, and so on.

Domain values need to be treated differently than other kinds of datasets.

If you’re onboarding data for research only, you can simply bring it into your data warehouse, and there isn’t too much to think about. Whether a data point is the security’s maturity date (a calendar date), its nominal amount (a number with a currency), its issuer’s name (plain text), or its type of guarantor (a domain’ed attribute), even if there is unexpected – or worst case, invalid – information, the harm is relatively minimal.

But if the data is meant to drive, say, a trading system, a portfolio management system, regulatory reporting, or a valuations engine – just to name a few – all the data you’re onboarding needs to be prudently mastered. Otherwise, the result will be sheer havoc: loss of data, missed opportunities or incorrect reporting. It’s absolutely essential to have a strong handle on those domain values.

All of which means that one needs to be able to check whether a particular domain value is truly within the set of values this attribute is supposed to have.

It’s the same as having to validate, say, a number range.

However, what’s different about domain values (such as warrant exercise terms or the trading restriction type) is that each and every possible value at any given point in time must be known and catered for in advance.

Domain values are essentially long lists of well-defined values – but those lists are not stable forever. In fact, they change – typically, by being expanded – more frequently than one would expect.

To stay on top of this continual change requires close cooperation with the sources of that data, mostly external data vendors. If you don’t maintain that level of cooperation with the vendors, you will not become aware of changes ahead of time and will only find out through errors and exceptions after the fact. For example, there may be a new instrument, which requires knowing what granular type it is, how it needs to be treated throughout the processing eco-system, what calculations need to be applied to it, and into which user’s queue or to which consuming system it is eventually to be passed.

This is where data mastering becomes so essential, as it provide you with full awareness of upcoming changes to those domain value sets, with no interruptions or miscalculations.