Although you clean and validate daily data feeds, data at rest degrades over time. Data generated inside your business may also not have been rigorously cleansed before being distributed and stored. When your data resides in multiple instrument masters, exposing and improving overall data quality can seem extremely difficult. With a proven methodology and the right technology to hand, it no longer needs to be a daunting prospect.

Data quality is the foundation for most significant business or operating model improvements. Insightful institutions are formalizing their data quality practices, putting in place a continuous improvement framework as an enabler for better business and smoother change.

When all data can be compared and quality-tested in a consistent way on a daily basis, clarity and objectivity are achieved. The priorities for improvement are also clearly exposed and communicated to the data owners.

In addition to dashboards showing data quality improvements over time, which are key to proving the ROI of your initiatives, data quality metrics (by asset class, for example) let you drill down to the individual errors and exceptions that need to be fixed. Data quality can also be analyzed by type of quality, e.g.:

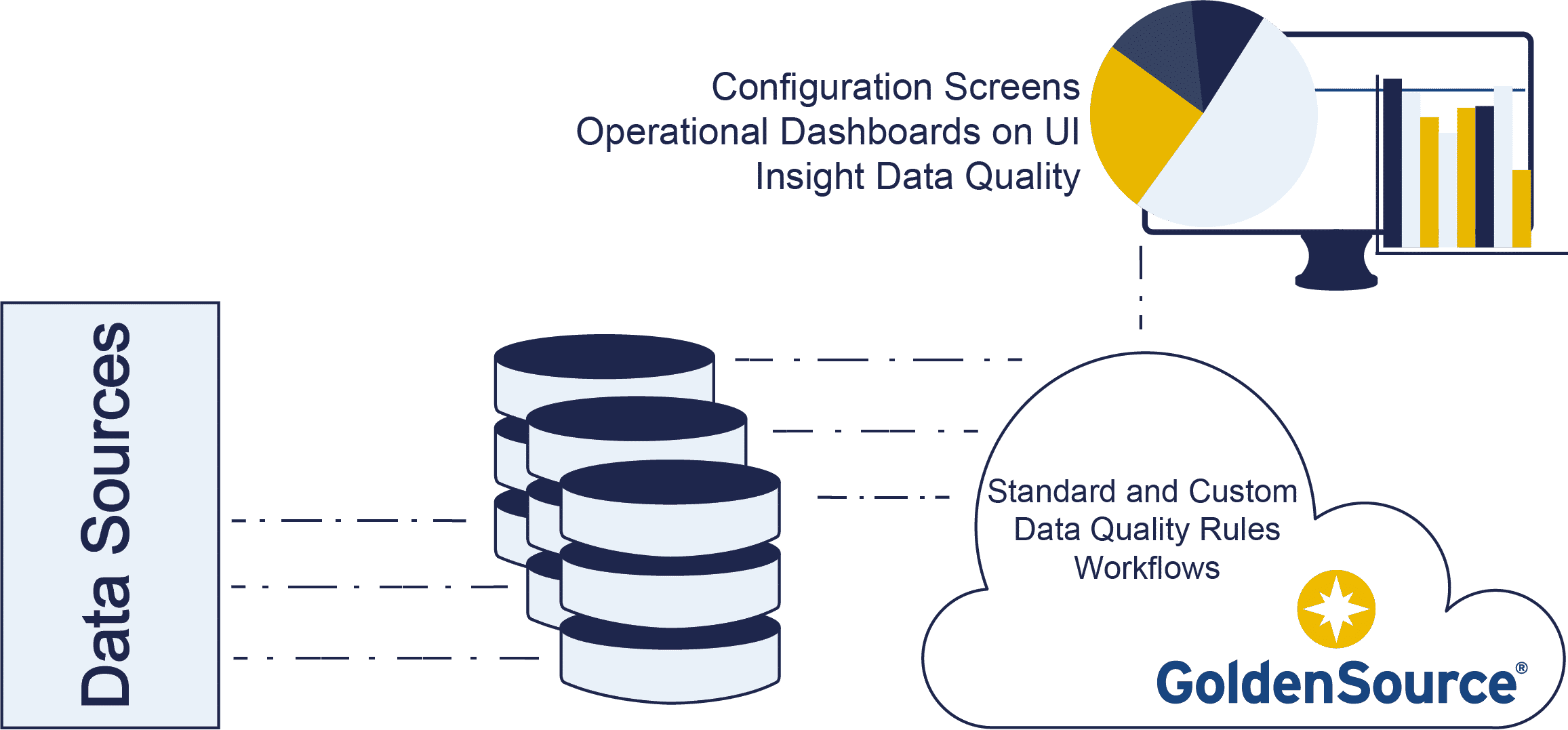
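To make the idea of metrics that roll up by asset class and by type of quality, and then drill down to individual exceptions, more concrete, here is a minimal Python sketch. It is illustrative only: the record layout, the asset classes, the quality dimensions ("completeness", "validity") and the rules are all hypothetical and do not represent the product's data model or rule library.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Rule:
    name: str
    dimension: str                 # hypothetical quality type, e.g. "completeness"
    check: Callable[[dict], bool]  # returns True when the record passes


# Hypothetical instrument records; field names and values are illustrative only.
RECORDS = [
    {"id": "EQ1", "asset_class": "Equity", "isin": "US0378331005", "currency": "USD"},
    {"id": "EQ2", "asset_class": "Equity", "isin": None, "currency": "USD"},
    {"id": "FI1", "asset_class": "Fixed Income", "isin": "XS0000000000", "currency": "usd"},
]


def is_iso_currency_format(value) -> bool:
    """Format check only: three upper-case letters, not a lookup against ISO 4217."""
    return isinstance(value, str) and len(value) == 3 and value.isalpha() and value.isupper()


# Hypothetical rules, each tagged with the quality dimension it measures.
RULES = [
    Rule("isin_present", "completeness", lambda r: bool(r.get("isin"))),
    Rule("currency_format", "validity", lambda r: is_iso_currency_format(r.get("currency"))),
]


def pass_rates(counts: Dict[str, List[int]]) -> Dict[str, float]:
    """Convert [passed, total] counters into pass rates between 0 and 1."""
    return {key: passed / total for key, (passed, total) in counts.items()}


def run_rules(records, rules):
    """Apply every rule to every record.

    Returns pass rates by asset class, pass rates by quality dimension, and the
    (record id, rule name) exceptions that a user would drill down into.
    """
    by_asset = defaultdict(lambda: [0, 0])      # asset class -> [passed, total]
    by_dimension = defaultdict(lambda: [0, 0])  # dimension -> [passed, total]
    exceptions: List[Tuple[str, str]] = []
    for record in records:
        for rule in rules:
            ok = rule.check(record)
            for counts, key in ((by_asset, record["asset_class"]), (by_dimension, rule.dimension)):
                counts[key][0] += int(ok)
                counts[key][1] += 1
            if not ok:
                exceptions.append((record["id"], rule.name))
    return pass_rates(by_asset), pass_rates(by_dimension), exceptions


asset_rates, dimension_rates, exceptions = run_rules(RECORDS, RULES)
print(asset_rates)  # {'Equity': 0.75, 'Fixed Income': 0.5}
print(exceptions)   # [('EQ2', 'isin_present'), ('FI1', 'currency_format')]
```

The same rule results feed both views: the pass rates are the dashboard figures, and the exception list is what a user drills into. At scale this work would typically be pushed down to the database and run in bulk; the in-memory loop here only shows the shape of the roll-up.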

We work with your team to identify the critical data elements (CDEs) across your data stores, covering securities reference data, clients and counterparties, accounts and products. Our out-of-the-box rules accelerate and de-risk the initial phases of your data quality project, ensuring rapid time to value. Because weightings are applied to rules and CDEs, the reports make it clear which issues need to be fixed first. You can also easily introduce custom rules to suit your particular processes or data types, ensuring maximum value across your business.
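One simple way that weightings on rules and CDEs could turn raw exception counts into a fix-first ranking is sketched below in Python. This is an assumption for illustration, not the product's scoring method: the CDE names, rule names, weight values and the multiplicative score (count × CDE weight × rule weight) are all hypothetical.

```python
from collections import Counter

# Hypothetical weights: how critical each data element is, and how severe each rule is.
CDE_WEIGHTS = {"isin": 5, "maturity_date": 3, "issuer_name": 1}
RULE_WEIGHTS = {"missing_value": 3, "invalid_format": 2, "stale_value": 1}


def prioritize(exceptions):
    """Rank (cde, rule) pairs by count x CDE weight x rule weight, highest first.

    Unknown CDEs or rules default to a weight of 1.
    """
    counts = Counter(exceptions)
    scored = [
        (cde, rule, n * CDE_WEIGHTS.get(cde, 1) * RULE_WEIGHTS.get(rule, 1))
        for (cde, rule), n in counts.items()
    ]
    return sorted(scored, key=lambda item: item[2], reverse=True)


# Hypothetical exceptions from one daily run: (critical data element, failed rule).
exceptions = [
    ("issuer_name", "stale_value"), ("issuer_name", "stale_value"),
    ("issuer_name", "stale_value"), ("issuer_name", "stale_value"),
    ("isin", "missing_value"),
    ("maturity_date", "invalid_format"), ("maturity_date", "invalid_format"),
]

for cde, rule, score in prioritize(exceptions):
    print(cde, rule, score)
# isin missing_value 15
# maturity_date invalid_format 12
# issuer_name stale_value 4
```

Note that the most frequent exception (stale issuer names) ends up last: weighting lets a single missing identifier on a critical element outrank many low-impact issues, which is the point of prioritizing by weight rather than by raw count.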

Whether you’re dealing with tens of thousands or tens of millions of instruments, the solution is proven. At first glance, running a rule against a set of data doesn’t seem complicated. But scale that up to multiple, complex, hierarchical rules applied to large data sets every day, and you also need the processing power to do it and the optimization required at the database level.

We take care of those concerns so that your operations and business users can focus on building out your data quality competence.

Standardize content from multiple external sources with maintained, off-the-shelf connections to leading financial data vendors such as Bloomberg, Refinitiv, ICE and many more.

GoldenSource Customer Master module acts as a global entity master linking customers and counterparties, along with employees, branches, contacts, addresses, accounts and products.

The only Master Data Management (MDM) platform to manage instrument, counterparty and issuer data on a single platform. Reduce risk and achieve competitive advantage.

GoldenSource Product Master meets the challenge of efficient sourcing, onboarding, cross-referencing and distribution of product & fund information from diverse sources and systems.

Data Quality Process